- Airflow scheduler not starting how to

- Airflow scheduler not starting update

Deployment management involves deciding which points you want to optimize. Choose the performance consideration that matters most and fine-tune accordingly. For example, you might tolerate a 30-second delay when parsing a new DAG, or you might require near-instant parsing, which requires higher CPU usage. You must monitor the system to capture the relevant data (you can use your existing monitoring tools). Prioritize the performance aspect you want to improve and check the system for bottlenecks. Performance monitoring and improvement should be a continuous process: once you’ve built an idea of your resource usage, you can consider targeted improvements.

Airflow scheduler not starting how to

The Airflow scheduler continuously parses DAG files to synchronize with the DAGs stored in the database, and schedules tasks for execution. It runs these two operations independently and simultaneously.

You must consider the following factors when fine-tuning the scheduler:

- The type of deployment: this includes the type of file system that shares the DAGs, its speed, processing memory, available CPU, and networking throughput.
- The definition and logic of the DAG structure: this includes the number of DAG files (and the number of DAGs within the files), their size and complexity, and whether you must import many libraries to parse a DAG file.
- The configuration of the scheduler: this includes the number of schedulers and parsing processes, the time interval until the scheduler re-parses a DAG, the number of Task instances processed per loop, the number of DAG runs per loop, and the frequency of checks and cleanups.

Airflow offers many ways to fine-tune the scheduler’s performance, but it is your responsibility to decide how to configure it.
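As a rough illustration of the scheduler configuration mentioned above, the sketch below renders a few `[scheduler]` options as environment variables, using Airflow's `AIRFLOW__{SECTION}__{KEY}` naming convention. The option names are taken from the Airflow 2.x configuration reference and may live in a different section in other versions, so check the reference for your release. The values, the `scheduler_tuning` dict, and both helper functions are hypothetical and are not recommendations.

```python
# Sketch only: turn a handful of [scheduler] options into the environment
# variables Airflow reads. Option names assume Airflow 2.x; values are
# illustrative placeholders, not tuning advice.

def airflow_env_var(section: str, key: str) -> str:
    """Build the AIRFLOW__{SECTION}__{KEY} variable name for a config option."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Hypothetical values chosen for the example.
scheduler_tuning = {
    "parsing_processes": 4,                  # number of DAG parsing processes
    "min_file_process_interval": 30,         # seconds before a DAG file is re-parsed
    "max_tis_per_query": 512,                # Task instances examined per query
    "max_dagruns_per_loop_to_schedule": 20,  # DAG runs examined per scheduler loop
}


def render_env(settings: dict) -> dict:
    """Map each option to its environment variable name and string value."""
    return {airflow_env_var("scheduler", k): str(v) for k, v in settings.items()}


if __name__ == "__main__":
    for name, value in render_env(scheduler_tuning).items():
        print(f"export {name}={value}")
```

Keeping the settings in one place like this makes it easier to change a single knob, observe the effect with your monitoring tools, and revert if the bottleneck moves elsewhere.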

Airflow scheduler not starting update

When a Task is removed from the queue, it transitions to the “running” state. After the job completes, the worker changes the job status to its final state, typically “success” or “failed”. This update is reflected in the Airflow scheduler.
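The status changes described here can be pictured as a small state machine. The following is a toy model, not Airflow code: `TaskInstanceModel`, `TRANSITIONS`, and `run_task` are hypothetical names, and the transition table is a simplification that uses Airflow's state names (`scheduled`, `queued`, `running`, `success`, `failed`) while ignoring retries, skips, and other states.

```python
# Toy model of a task's lifecycle. This does not import or imitate Airflow's
# real TaskInstance class; it only illustrates the order of state changes.

# Simplified transition table (an assumption, not Airflow's full state graph).
TRANSITIONS = {
    "scheduled": {"queued"},
    "queued": {"running"},
    "running": {"success", "failed"},
    "success": set(),
    "failed": set(),
}


class TaskInstanceModel:
    """Hypothetical stand-in for a task instance tracked in the metadata database."""

    def __init__(self, task_id: str):
        self.task_id = task_id
        self.state = "scheduled"
        self.history = ["scheduled"]

    def move_to(self, new_state: str) -> None:
        """Apply a transition, rejecting any move the table does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.task_id}: cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


def run_task(ti: TaskInstanceModel, work) -> str:
    """Walk one task through the lifecycle and return its final state."""
    ti.move_to("queued")    # the scheduler forwards the task to the executor
    ti.move_to("running")   # a worker pulls the task from the queue
    try:
        work()
    except Exception:
        ti.move_to("failed")
    else:
        ti.move_to("success")
    return ti.state


if __name__ == "__main__":
    ti = TaskInstanceModel("extract")
    print(run_task(ti, lambda: None), ti.history)
```

A task that stays in `queued` in a model like this never reaches the step where a worker picks it up, which is the situation to investigate when tasks appear queued in the UI but do not run.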

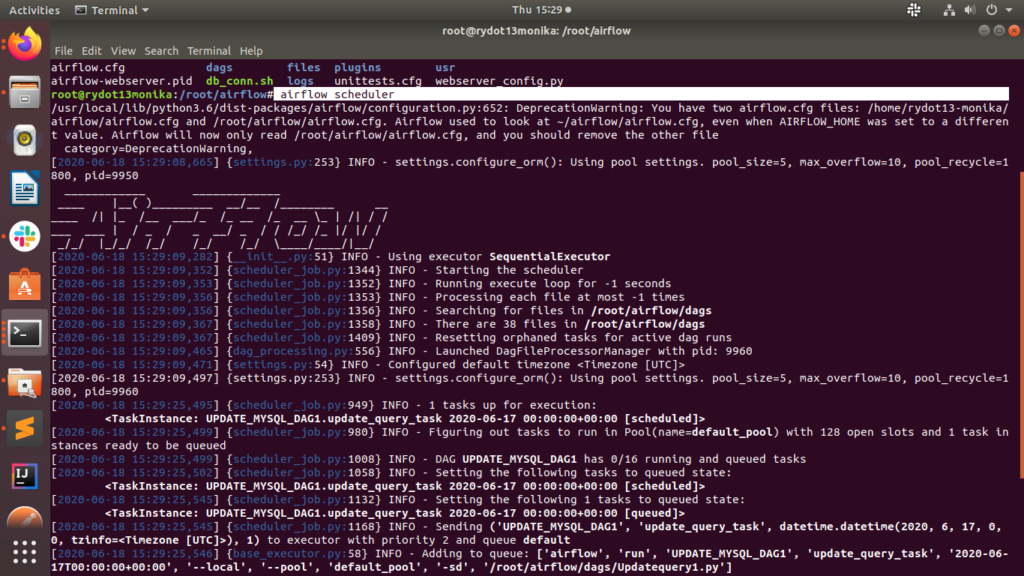

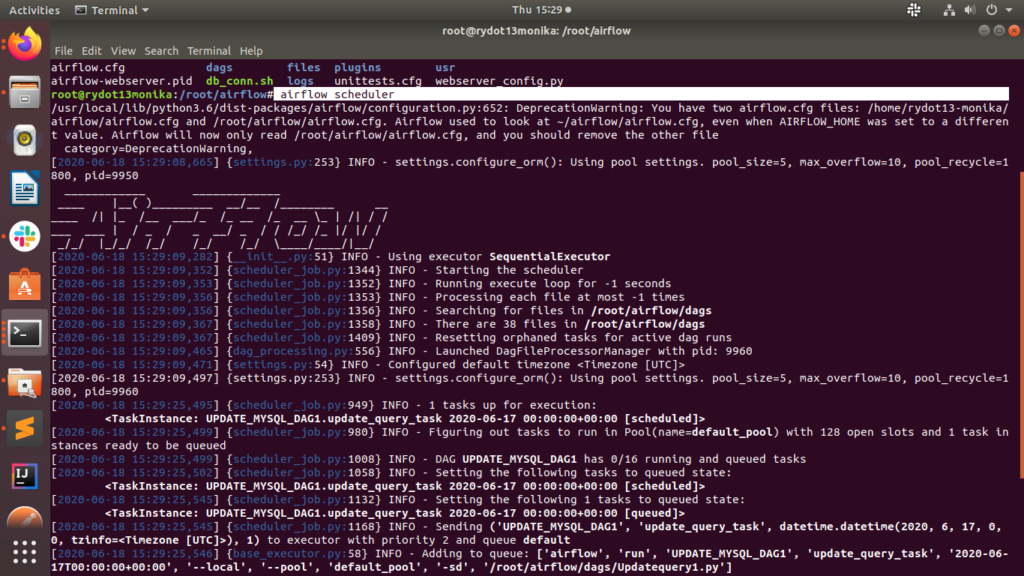

Airflow is a platform on which you can build and run workflows. It is commonly used to implement machine learning operations (MLOps) pipelines. Workflows are represented as directed acyclic graphs (DAGs) and contain individual Tasks, which can be arranged into a complete workflow while taking into account the order of Task execution, retries, dependencies, and data flows. A Task in Airflow describes the work to be performed, such as importing data, performing analysis, or triggering other systems.

The Airflow scheduler is a component that monitors all jobs and DAGs and triggers job instances when their dependencies are complete. Behind the scenes, the scheduler starts a child process that monitors all DAGs in the specified DAG directory and keeps them synchronized. By default, the scheduler collects DAG parsing results once per minute to see if an active Task can be triggered.

The Airflow scheduler modifies entries in the Airflow metadata database, which stores configurations such as variables, connections, user information, roles, and policies. It is the source of truth for all metadata about DAGs and also stores statistics about runs that were performed.

Here is the general process followed by the Airflow scheduler:

- When the Airflow scheduler service is started, the scheduler first checks the DAGs folder and instantiates all DAG objects in the metadata database.
- The scheduler parses the DAG file and generates the required DAG runs based on the schedule parameters.
- The scheduler creates a TaskInstance for each Task in the DAG that needs to be performed.
- These TaskInstances are assigned the status "scheduled" in the metadata database.
- The primary scheduler searches the database for all jobs in the "scheduled" state and forwards them to the executor.
- Workers start running by pulling jobs from the queue according to the run configuration.

I am new to Airflow and am trying to set it up to run ETL pipelines. When I schedule the jobs, the scheduler picks them up and queues them, which I can see in the UI, but the tasks are not running. The relevant settings from my configuration are:

sql_alchemy_conn = postgresql+psycopg2://:5432/airflow
api_client = _client
celery_app_name =

